Data Strategy

Data Strategy

Data Governance

- Data Quality

- Data Catalog

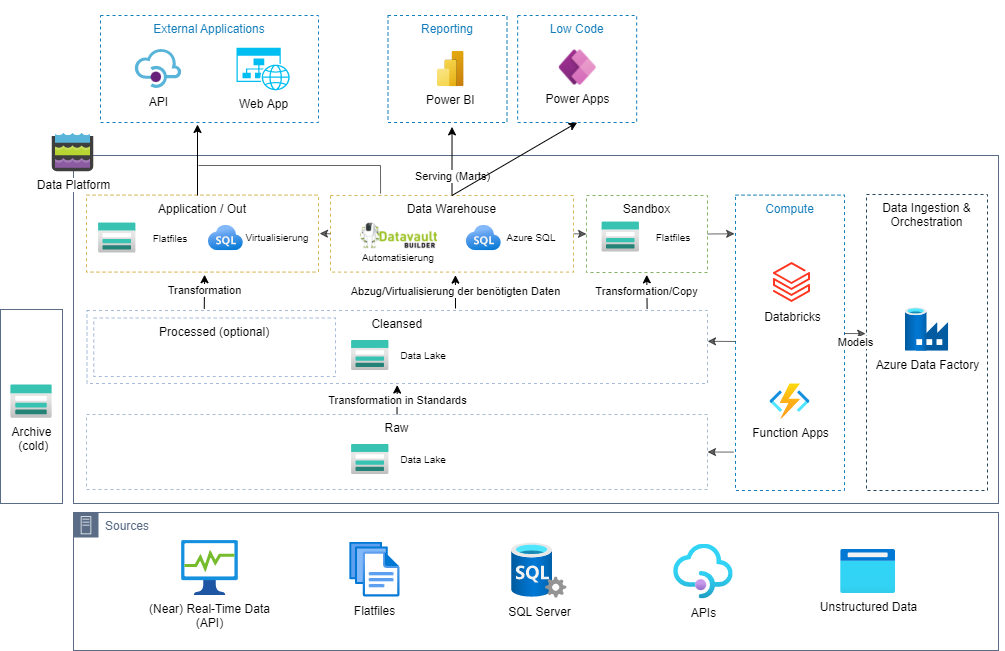

Data Platform

Agile BI

Data Driven Company

Hitchhikers Guide to the Data Galaxy®

Data Analytics

Visual Analytics

Information Design

Self-Service BI

IBCS Reporting

Reporting

Dashboarding

Sports Analytics

- Sports Analytics with AWS

Cloud Computing

Modern Cloud Data Stack

Cloud Migration

Cloud DWH

Cloud Engineering

Cloud Reference Architectures

Microsoft Azure Cloud Solutions

AWS

Multicloud

Operations

Data Platform Operations

Data Infrastructure Operations

Managed Cloud Services

Managed Cloud Applications

Monitoring

Data Science

Ethics in AI

AI Architecture

People Analytics

Machine Learning

Deep Learning

NLP

Predictive Analytics

Data Engineering

Data Engineering

Cloud Engineering

DevOps Engineering

Data Pipelines

Data Streams

CI/CD

Data Integration

Matillion

- Snowflake & Matillion

MS Azure Data Factory

dbt

Theobald Software

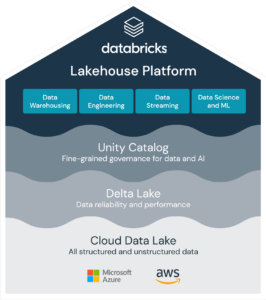

Databricks

Apache Kafka / Airflow

areto Applications

Data Quality Framework

Golden Record Framework

Data Migration Framework

Cloud Applications Framework

Monitoring Framework

Jetvault Framework
